Architectural requirements

You need to decide which architecture your Tachyon system will use, and which configuration options. Your architectural decisions will include the types of Tachyon servers you will need, and how many, as well as how you will provide SQL services.

Tip

This documentation covers installation on-premises and on your own cloud-based servers. Alternatively, please contact your 1E Account team if you wish to use 1E's SaaS solution and avoid having to install and maintain servers.

SQL Server can be local or remote from your Tachyon servers.

Tachyon supports the following High Availability (HA) options for SQL Server:

AlwaysOn Availability Group (AG) using an AG Listener

Failover Cluster Instance (FCI)

Tachyon Setup is a configuration wizard which must be run on each server in your architecture, to install the required components. Tachyon Setup supports the following types of Tachyon server:

All components on a single server - a single-server installation comprises Master Stack and Response Stack, which is the most common choice for a system of any size supporting Tachyon real-time features.

Master Stack - you would install a Master Stack on its own if you do not require real-time features, or you want one or more remote Response Stacks.

Response Stack - this choice allows you to install a Response Stack after you have completed a single-server installation or installed a Master Stack, and is required to support Tachyon real-time features.

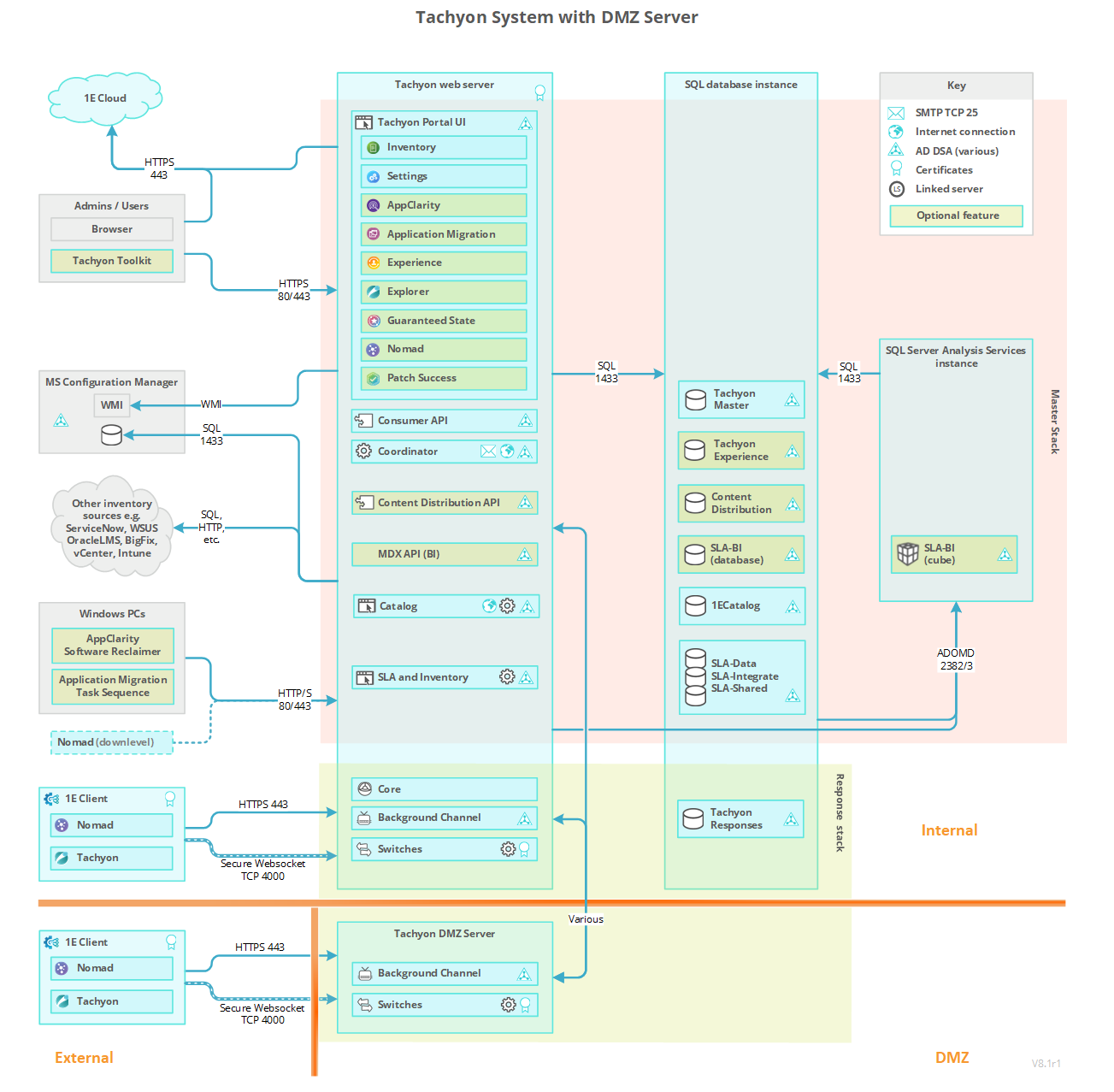

DMZ Server - this choice is used when installing a DMZ Server to provide real-time features for Internet-facing clients. For design and configuration steps, please refer to the Implementing a Tachyon DMZ Server page.

The number of devices you need to support influences the number of Switches and Response Stacks you will need, and their server specifications. Each Switch can handle up to 50000 devices, and you can have up to five Switches on a single Response Stack server, handling a maximum of 250000 devices.
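The limits above translate into simple arithmetic. The following is a minimal sketch, not a 1E tool: the function names are hypothetical, and it assumes the default limits quoted above (50,000 devices per Switch, five Switches per Response Stack).

```python
import math

DEVICES_PER_SWITCH = 50_000       # per-Switch limit quoted above
SWITCHES_PER_RESPONSE_STACK = 5   # per-Response-Stack limit quoted above


def switches_needed(devices: int) -> int:
    """Minimum number of Switches for a device count (at least one)."""
    if devices < 0:
        raise ValueError("devices must not be negative")
    return max(1, math.ceil(devices / DEVICES_PER_SWITCH))


def response_stacks_needed(devices: int) -> int:
    """Minimum number of Response Stacks under the default five-Switch limit."""
    return math.ceil(switches_needed(devices) / SWITCHES_PER_RESPONSE_STACK)


if __name__ == "__main__":
    for count in (25_000, 100_000, 250_000):
        print(count, switches_needed(count), response_stacks_needed(count))
```

This only gives a lower bound from device counts. Security, geographic or network reasons may call for more Response Stacks than the arithmetic suggests.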

Multiple Response Stacks provide a degree of high availability, but are not intended for that purpose. Instead, Response Stacks are required for security, geographic or other network reasons. For example, because your organization covers multiple geographies and you do not want 1E Client traffic to go beyond the boundaries of each region, or because you have Internet-facing devices and need a Tachyon DMZ Server on-premises.

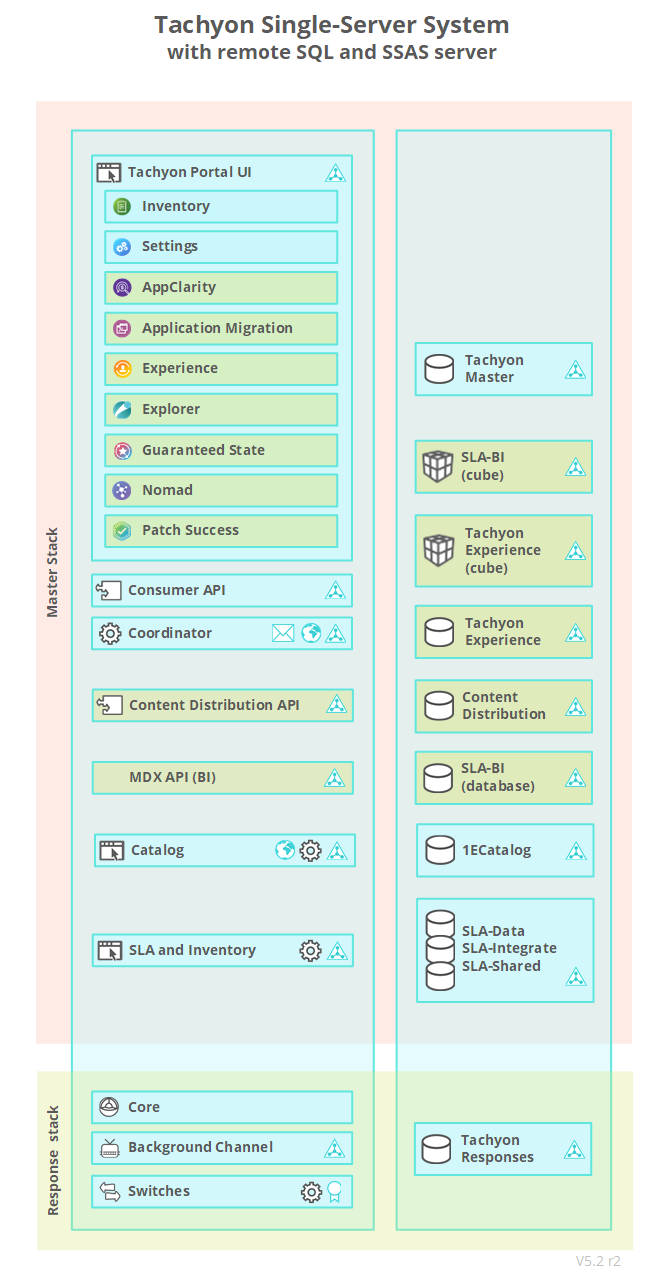

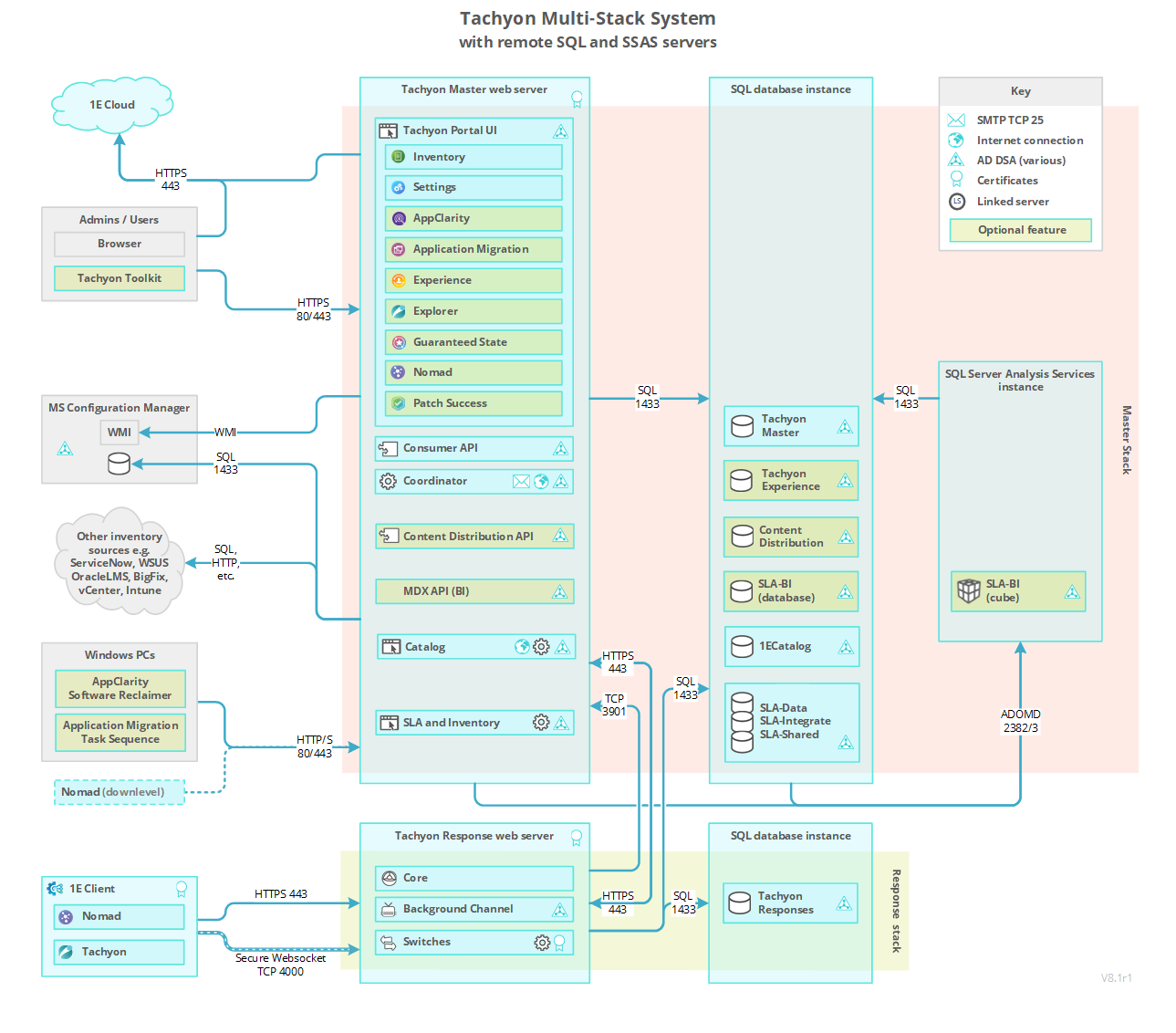

Tachyon Architecture

The pictures below show the most common architectural implementations of Tachyon that are supported by Tachyon Setup. Organizations with fewer than 50,000 devices will typically have a single-server system with one Switch, but there may be reasons why a more complex configuration would be required. Key factors are the location of servers and how devices and users will connect to them.

Every Tachyon system has a single Master Stack, which provides web services for Tachyon applications. Master Stack components are typically all installed on a single Master Server.

Tachyon real-time features require Response Stacks, which are made up of one or more Response Servers. A DMZ Server is an example of a Response Server. Each Response Stack has at least one Background Channel for sharing resources, and a single Core component that supports an associated set of up to five Switches. Switches are the primary mechanism for rapidly requesting and retrieving responses from the clients. As each Switch can handle up to 50,000 devices there is a limit of 250,000 devices per Response Stack. Higher numbers can be achieved if you contact 1E for guidance. Switches may be local or remote to the other components in the Response Stack.

Databases for Tachyon, 1E Catalog, Nomad, Experience, SLA and BI, are installed on SQL Server database instance(s) that may also be local or remote to their respective Master and Response servers. It is also possible for multiple Response Stacks to share the same Responses database. The Cube used by Patch Success (SLA-BI) is installed on a local or remote SQL Server Analysis Services (SSAS) instance.

The pictures of the single-server and internet-facing configurations each show a system with a single Response Stack. The internet-facing system is special because the components of its Response Stack are split over two servers. Splitting a stack across multiple servers is not usually necessary except in special circumstances; for example, the Switch and Background Channel on a DMZ Server are remote from their Core. The DMZ picture shows a dual firewall design, but a single firewall is also supported.

The databases for Tachyon Master and Response Stacks can exist on local or remote installations of SQL Server. Local is the simplest implementation. A local Responses database offers the best performance, whereas a remote Responses database requires an additional network interface and network routing explained in Network requirements.

Tachyon can be installed on-premises on physical and virtual servers, and also on AWS and Azure cloud servers.

For an explanation of Tachyon components, please refer to Tachyon components.

Note


Components colored green are optional when installing a Tachyon system.

Tachyon configuration options

Tachyon Setup configuration wizard has several standard options described above. There is also a custom installation option to support different configurations to those described.

Note

Please contact your 1E Account Team if you need help with this.

SQL Cluster and SQL Always On are supported, but require additional steps, described in High Availability options for SQL Server.

Note

Please contact your 1E Account Team if you need help with this.

Tachyon architecture for Internet-facing devices

1E Business Intelligence

Business Intelligence is an optional component installed by Tachyon Setup on the Master Stack, and requires SQL Server Analysis Services (SSAS). Business Intelligence is a prerequisite for the Patch Success application to support efficient presentation of visualizations on a large scale.

Applications and Tools

Tachyon applications and tools are consumers, and all consumers connect to the Tachyon Consumer API.

The following consumer applications run on Tachyon platform and are installed using Tachyon Setup. Please also refer to the License requirements page.

Applications are categorized as follows:

Configuration - applications required to configure Tachyon platform and required by all other applications

Client - applications that communicate with devices in real-time

Inventory – applications that use inventory features

Applications that are included in the Tachyon Platform license

Application | Purpose | Type |

|---|---|---|

View and export inventory, and manage associations. It uses the SLA databases. | Inventory, configuration | |

Configure and monitor platform features. It uses the Tachyon Master database. | Configuration | |

Ensure endpoint compliance to enterprise IT policies. It uses the Tachyon Master and Responses database. | Client | |

Investigate, remediate issues and manage operations across all your endpoints in real-time. It uses the Tachyon Master and Responses database. | Client |

Applications that require a license

Application | Purpose | Type |

|---|---|---|

Manage software license compliance, License Demand Calculations, license entitlements, and for reclaiming unused software. It uses the SLA databases. | Inventory | |

Intelligently automate the migration of applications during a Configuration Manager OS deployment. It uses the SLA databases. | Inventory | |

Manage content distribution across your organization using a centralized dashboard. It uses the Content Distribution database. 1E Client requires Tachyon and Content Distribution features to be enabled on all clients. Content Distribution replaces the Nomad features of ActiveEfficiency Server, which is deprecated. Legacy Nomad clients continue to be supported by direct communication with the Content Distribution API, whereas Nomad 8.x clients communicate with the Content Distribution API via the proxy feature on Response Servers. Tachyon Setup is used to install Content Distribution and the Nomad app, and no longer installs ActiveEfficiency. Nomad uses Content Distribution for the following features:

| Client | |

Reports on and ensures successful patching of your enterprise. It uses the SLA and BI databases. Tachyon Setup installs SLA Business Intelligence, which requires SQL Server Analysis Services. Patch Success also uses Inventory connectors to get metadata for patches from whichever of the following you use to approve patches:

| Inventory | |

Measure performance, stability and responsiveness for applications and devices to assess user experience across your enterprise. It uses the Tachyon Master and Tachyon Experience databases. | Client |

The following types of application require 1E Client (with Tachyon features enabled) to be deployed to all in-scope devices.

All Client applications

Inventory applications if you intend using the Tachyon connector to populate your inventory.

The AI Powered Auto-curation feature is optionally used by Inventory applications to provide automatic curation of new products. This avoids having to manually add products to the 1E Catalog, or wait for it to be updated. This optional feature requires additional memory on the Tachyon Server (Master Stack). Please refer to AI Powered Auto-curation: Memory requirements for details.

Some benefits of using AI Powered Auto-curation are that you can:

Achieve significantly more normalized software from the first sync

Reduce the manual effort required to normalize software

Get an expanded SAM offering as more data is available for AppClarity

Get additional coverage for Application Migration

Identify more software to review for security threats.

Tachyon Tools

The following are tools included in Tachyon platform. These tools are not installed using Tachyon Setup. They have either their own installers or are included in download zips.

Tachyon ConfigMgr UI Extensions - installed as part of the Tachyon Toolkit, this is a right-click extension for the Configuration Manager console that provides the option to browse and run an instruction on devices in a specified Collection. Refer to Preparing for the Tachyon extensions for the Configuration Manager Console for details.

Tachyon Run Instruction command-line tool - installed as part of the Tachyon Toolkit, it is used for sending instructions to the Tachyon server from a script or from a command prompt.

Tachyon Product Pack Deployment Tool - included in the Tachyon platform zip ProductPacks folder.

TIMS - used for the development of instructions using the Tachyon SDK, refer to About TIMS for details.

A typical Tachyon license allows the use of all these tools.

Telemetry

Telemetry helps 1E to continually improve your experience with Tachyon. Only summarized statistical information is collected, which enables 1E to see how customers use features of the application. No personally identifiable data is collected. 1E use this information to:

Understand how the product is being used to influence future development decisions

Plan supported platforms (OS, SQL etc. versions) over time

Deliver a smooth upgrade experience as we can focus testing on implemented scenarios

Improve system performance

Identify early warning signs of potential issues such as excessive growth of database tables, instruction failures etc. so we can pro-actively address them.

Server telemetry reports how the platform is used; data is compressed, encrypted and sent to 1E through email on a configurable schedule. Full details of the Server telemetry data sent to 1E are provided in Server telemetry data. User Interface telemetry reports how the user interface is used, and data is sent directly from administrator browsers to the 1E Cloud. Please refer to Whitelisting connections to 1E Cloud for details of the URLs that must be whitelisted for administrator browsers.

Telemetry features are configurable using Tachyon Setup during installation or upgrade, and can be enabled or disabled as a post-installation task. 1E encourages customers to enable sending telemetry.

Please also refer to Enabling or disabling Telemetry features.

Server sizing requirements

Objectives

The objective of this document is to provide prescriptive guidance for Tachyon customers implementing the solution, either on on-premises servers or on the Amazon AWS or Microsoft Azure cloud platforms.

There are many possible hardware server configurations, cloud instance types and storage options to choose from. This document aims to recommend, in detail, the best-performing configuration for customers at each client count size.

This document focuses heavily on the SQL Server implementation and the storage required for Tachyon, as this is the single most performance-demanding component of the system.

The default Tachyon configuration assumes, and recommends, a single Tachyon server and a separate remote SQL Server in a standard two-server setup. There are sections covering more complex implementations with separate Switch and Background Channel servers (DMZ), plus the extreme scenario where larger customers deploy multiple Tachyon servers in a split Response Stack and Master Stack configuration for reasons of scale and higher availability.

Assumptions

The key assumption in this document for sizing guidelines is that the customer is using all components of Tachyon, that is, Explorer, Guaranteed State, Experience, Patch Success, Inventory, and Content Distribution.

The sizing guidelines are based on extensive testing of all components of the Tachyon platform, at different client count scales, using representative test data to simulate typical customer environments and their administrative and reporting usage.

Therefore, the following sizing guidelines should be used as the minimum hardware requirements for the specific numbers of managed clients in the respective size environments.

In terms of CPUs, the test environment used Intel Xeon E5-2687 v3 @ 3.1 GHz, though any server-level CPU from 2016 or later should meet the performance needs for the cores specified in the following tables. Additional, newer or faster CPUs will improve performance, and the Tachyon platform and SQL Server will utilize all the CPU resources available.

In terms of data sizing, actual customer data and individual requirements will vary greatly. The data sizing is provided only as general guidance and assumes that some amount of storage will remain as free space, most of the time, when not at peak loads.

Performance load modelling

To simulate 100,000s of actual 1E Clients, 1E developed a Load Generation tool (loadgen) that maintains the same number of persistent connections and can respond with the same data responses as would actual real-world clients.

In reality, loadgen generates more data and creates greater data storms at the Tachyon servers than real-world 1E Client responses, some of which are offline or latent, would generate. It can therefore be considered to produce a more extreme, worst-case performance load on the Tachyon servers.

| Component | Feature | Assumptions |
|---|---|---|
| Explorer | Instructions | An average of 1,000 instructions per day (including Synchronous instructions). Peak loads of 10 simultaneous small and some large instructions, targeted at both small and large device sets. Small instructions (<4K) can be received and processed from all online clients in less than 15 seconds. |
| Guaranteed State | Policies | Around 20 deployed policies totaling 300 active rules and remediations. An average of up to 100 rule state changes per device per day. |
| Experience | Events and metrics | Each device reports an average of 300 Device and Software Performance, User Interactions and Windows events per day. The Experience Dashboards and UI drill-down queries should be able to display and filter required data views, within a few minutes. |
| Inventory (also known as SLA) | Hardware and software inventory | At least two connectors are configured (for example SCCM or BigFix) in addition to the Tachyon connector. An average of 200 software installations and 100 patches per device and up to 50,000 distinct software products, per 50,000 devices. Up to 50 distinct Management Groups per 50,000 devices. |
| Patch Success | Patch data | Clients process a maximum of 20 patch state changes per month for Windows and other related Microsoft Updates. |
| Content Distribution | Downloads | For every 50,000 clients there are 100 sites containing around 2,500 distinct subnets. At peak loads (that is, during a critical patch deployment) there could be up to 20,000 content download requests (and their responses) per minute handled by the platform and content registrations. |

An overall assumption is that long duration and high impact batch processing operations, such as SLA inventory sync consolidations and Experience sync processing, are run primarily out of business hours (typically overnight) when there would be little potential impact on other Tachyon traffic and administrative interactive query and analytical reporting.
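To get a feel for the volumes behind these assumptions, the per-device averages from the table can be scaled to a device count. This is an illustrative sketch only: the function and key names are hypothetical, and the figures are the averages assumed above, not measurements from any real environment.

```python
# Per-device averages taken from the load-modelling assumptions above.
EXPERIENCE_EVENTS_PER_DEVICE_PER_DAY = 300
RULE_STATE_CHANGES_PER_DEVICE_PER_DAY = 100   # stated as "up to" 100
SOFTWARE_INSTALLATIONS_PER_DEVICE = 200
PATCHES_PER_DEVICE = 100


def modelled_volumes(devices: int) -> dict[str, int]:
    """Scale the per-device modelling averages to a whole estate."""
    if devices < 0:
        raise ValueError("devices must not be negative")
    return {
        "experience_events_per_day": devices * EXPERIENCE_EVENTS_PER_DEVICE_PER_DAY,
        "rule_state_changes_per_day": devices * RULE_STATE_CHANGES_PER_DEVICE_PER_DAY,
        "software_installation_records": devices * SOFTWARE_INSTALLATIONS_PER_DEVICE,
        "patch_records": devices * PATCHES_PER_DEVICE,
    }


if __name__ == "__main__":
    for name, value in modelled_volumes(50_000).items():
        print(f"{name}: {value:,}")
```

For 50,000 devices this gives 15,000,000 modelled Experience events per day, which illustrates why SQL Server storage throughput dominates the sizing guidance.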

Using AI Powered Auto Curation with SLA Inventory Sync

Additional RAM is required if this feature is enabled, based on the total number of distinct software titles found in a specific customer environment. Please refer to the table detailed at AI Powered Auto-curation, which explains how to calculate the memory requirements. You must then add this to the figures in the following sizing tables.

On-premises installation

The Tachyon platform is recommended to be installed on a dedicated server, with a separate dedicated SQL Server, and can be installed on either virtual server or physical hardware.

For every 50,000 clients, Tachyon requires a separate instance of the Tachyon Switch component, and each Switch instance requires a dedicated network interface (NIC). In a virtualized environment, this should be a dedicated vNIC that preferably maps to a dedicated physical NIC on the host.

Tachyon is a high-intensity database application and so requires a highly performant Microsoft SQL Server setup, with fast storage, and this is the most important component to size correctly. Storage could be presented locally, but it is expected that the more likely scenario is that this is presented from a customer Enterprise Storage Area Network (SAN), or cloud-based managed storage.

As per Microsoft SQL Server best practice, 1E recommends provisioning at least three separate disk volumes for SQL Data, Logs, and TempDB. These volumes may be made up from multiple dedicated disks, striped in a RAID Volume for both resilience and performance, for example RAID 10, depending on the standard operational configuration of the customer’s on-premises storage sub-systems.

Data and log disk volumes should be formatted to use a 64 KB allocation unit size. It is assumed the customer is using fast SSD-based storage to achieve the necessary disk throughput in MB/s and IOPS: at least 6 Gb/s SATA, but preferably 12 Gb/s SAS or NVMe drives at higher scale.

Microsoft SQL Server should be configured according to the Microsoft best practice documentation. The default installation of SQL Server 2017 or later, will make automatic configuration settings for memory, TempDB, and processing parallelism. However, the best single index to overall Microsoft recommendations for SQL Server can be found at https://docs.microsoft.com/en-us/sql/relational-databases/performance/performance-center-for-sql-server-database-engine-and-azure-sql-database?view=sql-server-ver15.

Note

One setting to make over and above the SQL Server installation defaults is to set Maximum SQL Server Memory to a value that reserves some memory at the server for the Operating System itself, and any additional running processes. A reasonable rule of thumb to use, for a dedicated SQL Server configuration as defined in the following server tables, would be to set Maximum SQL Server Memory to 85% of total memory of the server.
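The rule of thumb above can be worked through as follows. This is a sketch under stated assumptions: the helper names are hypothetical, and the generated T-SQL uses the standard `sp_configure` option `max server memory (MB)`. Review any generated script before running it against a server.

```python
def max_sql_memory_mb(total_ram_gb: float, fraction: float = 0.85) -> int:
    """Return a Maximum SQL Server Memory value in MB.

    The default fraction is the 85% rule of thumb for a dedicated SQL Server;
    pass a different fraction for other layouts.
    """
    if not 0 < fraction <= 1:
        raise ValueError("fraction must be in the range (0, 1]")
    return int(total_ram_gb * 1024 * fraction)


def sp_configure_script(max_memory_mb: int) -> str:
    """Build a T-SQL script that applies the setting (to be reviewed by a DBA)."""
    return "\n".join([
        # 'max server memory (MB)' is an advanced option, so expose it first.
        "EXEC sys.sp_configure N'show advanced options', 1; RECONFIGURE;",
        f"EXEC sys.sp_configure N'max server memory (MB)', {max_memory_mb}; RECONFIGURE;",
    ])


if __name__ == "__main__":
    print(sp_configure_script(max_sql_memory_mb(64)))
```

For a dedicated SQL Server with 32 GB of RAM, this yields a cap of 27,852 MB, leaving roughly 4.8 GB for the operating system and other processes.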

Cloud-based SQL Database deployment templates may configure most, but not all, memory optimizations automatically. Some of the key recommendations to note are as follows.

Server memory configuration options (https://learn.microsoft.com/en-us/sql/database-engine/configure-windows/server-memory-server-configuration-options)

Configure the max degree of parallelism Server Configuration Option (https://learn.microsoft.com/en-us/sql/database-engine/configure-windows/configure-the-max-degree-of-parallelism-server-configuration-option)

optimize for ad hoc workloads server configuration option (https://learn.microsoft.com/en-us/sql/database-engine/configure-windows/optimize-for-ad-hoc-workloads-server-configuration-option)

In addition, it is essential that a backup and maintenance strategy for SQL Server is defined, and that backup and maintenance jobs are configured accordingly. These must be run during off-peak hours or when the server is not in use (such as overnight or at weekends) and should include the following.

At least weekly full backups and then daily differentials, as required

Daily Update statistics job and weekly Rebuild index followed by DBCC Checkdb

SQL Server Analysis Services (SSAS)

In addition to SQL Server databases, Patch Success requires an SSAS instance, in multi-dimensional mode, that can be co-located with the SQL Server Database installation, using the same data and log drives. The disk space required for the SSAS cubes is typically only tens of MB, so can largely be ignored in terms of disk space sizing.

SSAS settings and configuration can be left at the installation defaults, but additional best practice performance optimizations for SSAS can be found in the following Microsoft guidance at http://download.microsoft.com/download/d/2/0/d20e1c5f-72ea-4505-9f26-fef9550efd44/analysis%20services%20molap%20performance%20guide%20for%20sql%20server%202012%20and%202014.docx

When SSAS is being used, set SSAS Memory Limit Low to 90% and SSAS Memory Limit High to 95%. Both of these settings can be set in the Server properties via SQL Server Management Studio.

Small server sizing (<10K clients, on-premises and cloud)

For small systems with fewer than 10,000 seats and for Proof-of-Concept installations, all the Tachyon server components and SQL Server may, for simplicity, be installed on a single server. Note that this may mean higher SQL Server per-core licensing costs than a split two-server installation.

In a single server, as per Microsoft SQL Server best practice, it is still recommended to have a minimum of 3 separate disks (OS Drive, SQL DB and Logs, and TempDB) to gain optimum disk performance.

| Platform | On-Premises | Microsoft Azure | Amazon AWS |
|---|---|---|---|
| Devices | Up to 10,000 | Up to 10,000 | Up to 10,000 |
| Server Type | Physical or Virtual | Standard E4ds v4 | R5.xlarge |
| CPU Cores | 4 | 4 | 4 |
| RAM | 32 GB | 32 GB | 32 GB |
| Tachyon Switches | 1 | 1 | 1 |
| NICs | 1 | 1 | 1 |
| Disks | | | |
| OS Drive disk size | 64 GB | 64 GB | 64 GB |
| DB and Logs disk size | 500 GB | 500 GB | 500 GB |
| DB and Logs MBs/IOPS | 250/15,000 | 170/3,500 | 250/16,000 |
| TempDB disk size | 150 GB | 150 GB | 180 GB |
| TempDB MBs/IOPS | 250/15,000 | 242/38,500 | 250/16,000 |

Note

For a combined single-server installation, Maximum SQL Server Memory should be capped at 50% of available memory, to ensure the set of Tachyon databases has its own, dedicated pool of memory.

IOPS is calculated at standard 16k Block Size, although SQL Data volumes/disks should be formatted in Windows at 64k allocation units, according to Microsoft SQL Server best practice guidance.

For Cloud implementations, to achieve the required disk throughput, the above assumes using premium storage SSD based disks. For example, for Azure use Premium SSDs, and for AWS use EBS gp3 volumes.
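The relationship between IOPS and throughput at a given block size can be checked with a short calculation. This sketch assumes 1 MB = 1,024 KB and that throughput is simply IOPS multiplied by block size; the function names are hypothetical. Published limits for cloud disks are set independently and need not follow this ratio.

```python
def throughput_mb_per_s(iops: int, block_size_kb: int = 16) -> float:
    """Throughput implied by an IOPS figure at a fixed block size."""
    return iops * block_size_kb / 1024


def iops_for_throughput(mb_per_s: float, block_size_kb: int = 16) -> float:
    """IOPS needed to sustain a throughput at a fixed block size."""
    return mb_per_s * 1024 / block_size_kb


if __name__ == "__main__":
    print(throughput_mb_per_s(16_000))   # 250.0
    print(throughput_mb_per_s(15_000))   # 234.375
```

At a 16k block size, 16,000 IOPS corresponds to exactly 250 MB/s, and 15,000 IOPS to about 234 MB/s, which is in line with the 250/15,000 and 250/16,000 pairs in the table.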

Medium and large on-premises design patterns

| | Medium 1 | Medium 2 | Large 1 | Large 2 | Large 3 |
|---|---|---|---|---|---|
| Devices | 25,000 | 50,000 | 100,000 | 200,000 | 500,000 |
| Tachyon server | | | | | |
| CPU Cores | 4 | 8 | 16 | 32 | 64 |
| RAM | 16 GB | 32 GB | 64 GB | 128 GB | 256 GB |
| Tachyon Switches | 1 | 1 | 2 | 4 | 10 |
| NICs | 2 | 2 | 3 | 5 | 11 |
| Remote SQL Server | | | | | |
| CPU Cores | 4 | 8 | 16 | 24 | 64 |
| RAM | 32 GB | 64 GB | 128 GB | 256 GB | 512 GB |
| Disks | | | | | |
| DB Size | 500 GB | 1,000 GB | 2,000 GB | 4,000 GB | 8,000 GB |
| DB MBs/IOPS | 250/15,000 | 500/30,000 | 1,000/60,000 | 2,000/120,000 | 4,000/240,000 |
| Logs Size | 100 GB | 200 GB | 500 GB | 1,000 GB | 2,000 GB |
| Logs MBs/IOPS | 170/10,000 | 250/15,000 | 500/30,000 | 1,000/60,000 | 2,000/120,000 |
| TempDB Size | 150 GB | 300 GB | 600 GB | 1,200 GB | 2,000 GB |
| TempDB MBs/IOPS | 250/15,000 | 500/30,000 | 1,000/60,000 | 2,000/120,000 | 4,000/240,000 |

Note

Customers with intervening seat counts should choose the closest higher number. For example, a 150,000-seat implementation should be treated as Large 2 rather than Large 1.

IOPS is calculated at standard 16k Block Size, although SQL Volumes should have a 64k block size, and disks/volumes should be formatted in Windows at 64k allocation units, according to Microsoft best practice guidance.
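The "closest higher number" rule can be expressed as a lookup. This is a minimal sketch with hypothetical names; the tier limits are the device counts from the table above. Systems below 10,000 devices may instead use the small single-server option described earlier.

```python
from bisect import bisect_left

# (maximum devices, design pattern) pairs from the sizing table, in ascending order.
TIERS = [
    (25_000, "Medium 1"),
    (50_000, "Medium 2"),
    (100_000, "Large 1"),
    (200_000, "Large 2"),
    (500_000, "Large 3"),
]


def design_pattern(devices: int) -> str:
    """Pick the smallest design pattern whose device count covers the estate."""
    if devices < 1:
        raise ValueError("devices must be positive")
    limits = [limit for limit, _ in TIERS]
    index = bisect_left(limits, devices)
    if index == len(TIERS):
        raise ValueError("above the largest documented pattern; contact 1E")
    return TIERS[index][1]


if __name__ == "__main__":
    print(design_pattern(150_000))   # Large 2
```

`bisect_left` returns the first tier whose limit is greater than or equal to the device count, so an estate of exactly 100,000 devices maps to Large 1, while 150,000 maps to Large 2 as in the example in the note.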

Network considerations

A server hosting a Tachyon Response Stack requires a dedicated network interface for each Switch, and for the connection to the remote SQL Server, to keep incoming traffic from clients separate from the outgoing traffic to the Response and other Tachyon SQL databases. It is expected that this Server-to-Server network traffic, would be over a Data Center backbone network of 10Gb or more.
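The NIC counts in the sizing tables follow directly from this rule. The sketch below uses a hypothetical helper name and assumes one NIC per Switch plus one for the remote SQL Server connection, with no extra NIC when SQL Server is local, as in the small single-server pattern.

```python
def nics_needed(switches: int, remote_sql: bool = True) -> int:
    """One dedicated NIC per Switch, plus one for a remote SQL Server link."""
    if switches < 1:
        raise ValueError("a Response Stack needs at least one Switch")
    return switches + (1 if remote_sql else 0)


if __name__ == "__main__":
    # Switch counts for Medium 1 to Large 3 from the on-premises table.
    for switch_count in (1, 1, 2, 4, 10):
        print(switch_count, nics_needed(switch_count))
```

With the Switch counts from the on-premises table this reproduces the documented NIC counts of 2, 2, 3, 5 and 11.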

Accelerated Networking, which provides enhanced NIC performance using Receive Side Scaling (RSS), should be enabled on all NICs. Please refer to https://docs.microsoft.com/en-us/windows-hardware/drivers/network/introduction-to-receive-side-scaling.

Also, for better performance, increase the transmit (TX) and receive (RX) buffer sizes to their maximum under the Windows network adaptor advanced properties.

Microsoft Azure

Out of scope

This document only focuses on implementing Tachyon components and SQL Server on individual Azure VMs, and configurations using Azure Premium storage.

It doesn't consider using Microsoft SQL as part of Azure Platform as a Service (PAAS) offerings, either SQL Server Managed Instances or Native Azure SQL (https://docs.microsoft.com/en-us/azure/azure-sql/azure-sql-iaas-vs-paas-what-is-overview) as these solutions are not currently supported by 1E as a means to implement SQL Server for Tachyon.

In addition, it does not consider using Azure instances that do not rely on Azure premium storage, but have local NVMe drives, like the Lsv2-series. Although these instance types have very high performing storage and data transfer bandwidth, the NVMe disks are ephemeral (non-persistent), so they are only practical for TempDB on a single SQL Server instance.

In the future, 1E plan to provide guidance in using non-persistent storage solutions or SQL Business Critical Managed Instances, as part of a SQL Server Always on Availability Group cluster, that would provide storage resilience and redundancy as described in https://docs.microsoft.com/en-us/sql/database-engine/availability-groups/windows/overview-of-always-on-availability-groups-sql-server?view=sql-server-ver15. However, since these solutions require a cluster of minimum 3 nodes, it should be noted that these solutions would be much more expensive to implement than individual VMs.

Azure VM Selection

This document should be read in conjunction with the Azure documentation, especially the guidance on maximizing Microsoft SQL Server performance on Azure VMs: https://docs.microsoft.com/en-us/azure/azure-sql/virtual-machines/windows/performance-guidelines-best-practices.

Azure Premium storage recommendations for SQL Server workloads are also detailed more completely in https://docs.microsoft.com/en-us/azure/virtual-machines/premium-storage-performance#optimize-IOPS-throughput-and-latency-at-a-glance.

Based on these factors, 1E recommend using Azure Dsv4 VMs for the Tachyon server and Edsv4-series VMs for SQL Server, to achieve the optimum ratio of vCPU and memory count for the separate requirements.

Dsv4 and Edsv4-series sizes run on the Intel® Xeon® Platinum 8000 series (Cascade Lake) processors. In addition, the Edsv4 virtual machine sizes feature up to 504 GiB of RAM, in addition to fast and large local SSD storage (up to 2,400 GiB). These virtual machines are ideal for memory-intensive enterprise applications and applications that benefit from low latency, high-speed local storage in the following specifications.

Dsv4 Series

| Size | vCPU | Memory: GiB | Temp storage (SSD) GiB | Max data disks | Max uncached disk throughput: IOPS/MBps | Max NICs | Expected Network bandwidth (Mbps) |
|---|---|---|---|---|---|---|---|
| Standard_D2s_v4 | 2 | 8 | 0 | 4 | 3200/48 | 2 | 1000 |
| Standard_D4s_v4 | 4 | 16 | 0 | 8 | 6400/96 | 2 | 2000 |
| Standard_D8s_v4 | 8 | 32 | 0 | 16 | 12800/192 | 4 | 4000 |
| Standard_D16s_v4 | 16 | 64 | 0 | 32 | 25600/384 | 8 | 8000 |
| Standard_D32s_v4 | 32 | 128 | 0 | 32 | 51200/768 | 8 | 16000 |
| Standard_D48s_v4 | 48 | 192 | 0 | 32 | 76800/1152 | 8 | 24000 |
| Standard_D64s_v4 | 64 | 256 | 0 | 32 | 80000/1200 | 8 | 30000 |

Azure VM constrained core CPU options

At some VM sizes, it is possible to reduce the vCPU count, and therefore the SQL Server license requirements, whilst maintaining the higher storage throughput of the VM. A constrained core VM has the same VM and OS license pricing as the full-size VM but presents half the vCPU core count, which minimises SQL Server license costs while keeping the higher storage throughput that SQL Server requires.

Please refer to https://learn.microsoft.com/en-us/azure/virtual-machines/constrained-vcpu.

Increasing Azure SQL Server Performance

The latest Azure Ev5 series VMs provide up to a threefold increase in remote storage performance compared with previous generations: https://azure.microsoft.com/en-us/blog/increase-remote-storage-performance-with-azure-ebsv5-vms-now-generally-available/.

For larger scale Tachyon environments, achieving the best storage throughput provides the best performance for long running data consolidation operations.

In addition, the latest Ev5 Series VMs support disk bursting, which provides the ability to boost disk storage IOPS and MB/s performance: https://learn.microsoft.com/en-us/azure/virtual-machines/disk-bursting

On-demand disk bursting in Azure is only available for premium disks of 1 TB or greater (P30 and above). Enabling it on the SQL data disk(s) allows you to achieve the maximum storage throughput available to the VM during high-intensity operations: https://learn.microsoft.com/en-us/azure/virtual-machines/disks-enable-bursting.

Azure prescriptive design patterns

Medium 1 | Medium 2 | Large 1 | Large 2 | Large 3 | |

|---|---|---|---|---|---|

Devices | 25,000 | 50,000 | 100,000 | 200,000 | 500,000 |

Tachyon server | |||||

VM type | Std_D4s_v4 | Std_D8s_v4 | Std_D16s_v4 | Std_D32s_v4 | Std_D64s_v4 |

CPU Cores | 4 | 8 | 16 | 32 | 64 |

RAM | 16 GB | 32 GB | 64 GB | 128 GB | 256 GB |

Tachyon Switches | 1 | 1 | 2 | 4 | 10 |

NICs | 2 | 2 | 3 | 5 | 11* |

Remote SQL Server | |||||

VM Type | Std E4ds v4 | Std_E8ds v4 | Std E16ds v4 | Std_E20ds_v4 | Std_E64ds_v4 |

CPU Cores | 4 | 8 | 16 | 20 | 64 |

RAM | 32 GB | 64 GB | 128 GB | 160 GB | 504 GB |

Max MB/s | 96 | 192 | 384 | 480 | 1,200 |

Max IOPS | 6,400 | 12,800 | 25,600 | 32,000 | 80,000 |

DB Size | 500 GB | 1,000 GB | 2,000 GB | 4,000 GB | 8,000 GB |

DB MBs/IOPS | 170/3,500 | 340/7,000 | 400/10,000 | 800/20,000 | 1,600/40,000 |

Logs Size | 128 GB | 256 GB | 500 GB | 1,000 GB | 2,000 GB |

Logs MBs/IOPS | 170/3,500 | 170/3,500 | 170/3,500 | 200/5,000 | 250/7,500 |

TempDB Size | 150 GB | 300 GB | 600 GB | 750 GB | 2,000 GB |

TempDB MBs/IOPS | 242/38,500 | 485/77,000 | 968/154,000 | 1,211/193,000 | 3,872/615,000 |

Note

Customers with an intermediate seat count should choose the next higher size. For example, a 150,000-seat implementation should be treated as Large 2 rather than Large 1.

As noted above, to reach the required throughput in MB/s, especially for the data drive, multiple P20, P30 or P40 Premium disks should be used and striped using Windows Storage Spaces to present a single volume.

The high MBs/IOPS figures for the TempDB drives reflect the fast, local SSD-based (non-persistent) storage that the Edsv4-series provides.
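The striping guidance above can be sketched as a small calculation. This is an illustration only: it assumes that a striped volume scales linearly with the number of disks, and the per-disk figures in the example (200 MB/s and 5,000 IOPS) are assumptions that should be checked against the current Azure disk limits. The function name is hypothetical.

```python
import math


def disks_to_stripe(target_mbps: float, target_iops: int,
                    disk_mbps: float, disk_iops: int) -> int:
    """Smallest number of identical disks whose combined limits meet both targets.

    Assumes linear scaling across the stripe, which is a simplification.
    """
    if disk_mbps <= 0 or disk_iops <= 0:
        raise ValueError("per-disk limits must be positive")
    by_throughput = math.ceil(target_mbps / disk_mbps)
    by_iops = math.ceil(target_iops / disk_iops)
    return max(1, by_throughput, by_iops)


# Large 2 data volume target from the table: 800 MB/s and 20,000 IOPS.
# The per-disk limits below are assumed values, not taken from Azure at run time.
print(disks_to_stripe(800, 20_000, disk_mbps=200, disk_iops=5_000))  # 4
```

The VM's own storage limits still cap what the striped volume can deliver, so the result is an upper bound on useful disk count rather than a guarantee of throughput.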

Azure network considerations

A server hosting a Tachyon Response Stack requires a dedicated network interface for each of its Switches, plus one for the connection to the remote SQL Server instance used for the Responses database. This keeps incoming traffic from clients separate from the outgoing traffic to the Responses database.

Accelerated Networking, which provides enhanced NIC performance using SR-IOV, should be enabled on the SQL VM network interface and on the platform VM network interface that communicates with it. Detailed steps on how to configure this are given here: https://docs.microsoft.com/en-us/azure/virtual-network/create-vm-accelerated-networking-powershell.

Also increase the transmit (TX) and receive (RX) buffer sizes to their maximum under the network adaptor advanced properties.

If connecting to an Azure-based Tachyon Switch via external public Azure IP addresses or Azure Load Balancers, you will need to extend the default TCP idle timeout from 4 minutes to 15 minutes. Detailed steps on how to do this can be found here: https://azure.microsoft.com/en-us/blog/new-configurable-idle-timeout-for-azure-load-balancer.

Amazon AWS

Out of scope

This document focuses only on Amazon Elastic Compute Cloud (EC2) instances and configurations using Amazon Elastic Block Store (EBS).

It does not consider using Microsoft SQL Server as part of AWS Platform as a Service (PaaS) offerings such as Amazon Relational Database Service (RDS) (https://aws.amazon.com/rds/), as this is not currently supported by 1E as a means to implement SQL Server for Tachyon.

In addition, it does not consider AWS instances that use local NVMe-based storage instead of the EBS platform, such as the I3en and R5d instance types.

Although these instance types have very high-performing storage and data transfer bandwidth, the NVMe disks are ephemeral (non-persistent), so for SQL Server they are only practical for TempDB.

In the future, 1E plans to provide guidance on using non-persistent storage solutions as part of a SQL Server Always On availability group cluster, which provides storage resilience and redundancy as described in https://learn.microsoft.com/en-us/sql/database-engine/availability-groups/windows/overview-of-always-on-availability-groups-sql-server. However, since these solutions require a cluster of at least three nodes, they would be much more expensive to implement.

AWS instance selection

This document should be read in conjunction with the AWS documentation, especially with guidance on Maximizing Microsoft SQL Server Performance with Amazon EBS https://aws.amazon.com/blogs/storage/maximizing-microsoft-sql-server-performance-with-amazon-ebs.

In recommending the AWS instance type and size, 1E has followed this guidance:

Instances are based on the latest AWS Nitro System https://aws.amazon.com/ec2/nitro

Amazon EBS-optimized instances were selected, so that they have the best possible storage throughput in terms of MB/s and IOPS https://docs.aws.amazon.com/AWSEC2/latest/UserGuide/ebs-optimized.

Based on these factors, 1E recommends using EC2 M5 instances for the Tachyon server and R5 instances for the SQL Server, to get the optimum ratio of vCPU count to memory.

EC2 M5/R5 instances have 3.1 GHz Intel Xeon® Platinum 8000 series processors with the Intel Advanced Vector Extensions 512 (AVX-512) instruction set and the following specifications.

Instance Size | vCPU | Memory (GiB) | Instance Storage (GiB) | Network Bandwidth (Gbps) | EBS Bandwidth (Mbps) |

|---|---|---|---|---|---|

m5.large | 2 | 8 | EBS-Only | Up to 10 | Up to 4,750 |

m5.xlarge | 4 | 16 | EBS-Only | Up to 10 | Up to 4,750 |

m5.2xlarge | 8 | 32 | EBS-Only | Up to 10 | Up to 4,750 |

m5.4xlarge | 16 | 64 | EBS-Only | Up to 10 | 4,750 |

m5.8xlarge | 32 | 128 | EBS-Only | 10 | 6,800 |

m5.12xlarge | 48 | 192 | EBS-Only | 12 | 9,500 |

m5.16xlarge | 64 | 256 | EBS-Only | 20 | 13,600 |

m5.24xlarge | 96 | 384 | EBS-Only | 25 | 19,000 |

AWS instance CPU options

The above table shows the default number of vCPUs provisioned for a specific AWS instance type at creation time.

Amazon EC2 instances support multi-threading, which enables multiple threads to run concurrently on a single CPU core. By disabling multi-threading (hyper-threading), it is possible to get fewer vCPUs, and therefore lower SQL license requirements, whilst maintaining the higher storage throughput. Please refer to https://docs.aws.amazon.com/AWSEC2/latest/UserGuide/instance-optimize-cpu.
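As a rough sketch of the arithmetic, the helper below derives CPU options for an instance with hyper-threading disabled. It assumes two threads per core by default and returns a mapping with `CoreCount` and `ThreadsPerCore` keys, which is the shape the EC2 CPU options take at launch. The function name is hypothetical, and the result should be checked against the valid core counts for the chosen instance type.

```python
def cpu_options_without_hyperthreading(default_vcpus: int) -> dict:
    """Return CPU options with one thread per physical core.

    Assumes the instance type exposes two threads per core by default,
    so the physical core count is half the default vCPU count.
    """
    if default_vcpus < 2 or default_vcpus % 2:
        raise ValueError("expected an even default vCPU count (two threads per core)")
    return {"CoreCount": default_vcpus // 2, "ThreadsPerCore": 1}


# A 48-vCPU instance size presents 24 vCPUs once hyper-threading is disabled.
print(cpu_options_without_hyperthreading(48))  # {'CoreCount': 24, 'ThreadsPerCore': 1}
```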

AWS EBS storage selection

For any solution based on Microsoft SQL Server, storage throughput is normally the major bottleneck to performance, and Tachyon is no exception to this.

The maximum storage throughput in terms of MB/s and IOPS for specific AWS R5 instances is detailed in the table below. This determines the maximum IOPS you can actually obtain from the selected AWS storage volumes.

For example, if you attach a single 20,000-IOPS volume to an r5.4xlarge instance, you reach the instance limit of 18,750 IOPS before you reach the volume limit of 20,000 IOPS.

Instance size | Maximum storage bandwidth (Mbps) | Maximum throughput (MB/s, 128 KiB I/O) | Maximum IOPS (16 KiB I/O) |

|---|---|---|---|

r5.large | 4,750 | 593.75 | 18,750 |

r5.xlarge | 4,750 | 593.75 | 18,750 |

r5.2xlarge | 4,750 | 593.75 | 18,750 |

r5.4xlarge | 4,750 | 593.75 | 18,750 |

r5.8xlarge | 6,800 | 850 | 30,000 |

r5.12xlarge | 9,500 | 1,187.5 | 40,000 |

r5.16xlarge | 13,600 | 1,700 | 60,000 |

r5.24xlarge | 19,000 | 2,375 | 80,000 |
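The relationship between the columns of this table, and the r5.4xlarge example given before it, can be sketched as follows. This is a simplified model that treats the usable IOPS as the lower of the instance limit and the combined volume IOPS. The function names are hypothetical.

```python
from typing import Sequence


def mbps_to_mb_per_s(megabits_per_second: float) -> float:
    """Convert storage bandwidth in megabits/s to megabytes/s (8 bits per byte)."""
    return megabits_per_second / 8


def effective_iops(instance_max_iops: int, volume_iops: Sequence[int]) -> int:
    """Usable IOPS: the instance limit caps the combined IOPS of all attached volumes."""
    return min(instance_max_iops, sum(volume_iops))


print(mbps_to_mb_per_s(4750))            # 593.75
print(effective_iops(18_750, [20_000]))  # 18750
```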

The recommended AWS EBS storage type is Provisioned IOPS SSD (io1 and io2) volumes. These volumes are designed to meet the needs of I/O-intensive workloads, particularly database workloads, that are sensitive to storage performance and consistency.

Unlike General Purpose EBS Storage (gp2), which uses a bucket and credit model to calculate performance, io1 and io2 volumes allow you to specify a consistent IOPS rate when you create volumes, and Amazon EBS delivers the provisioned performance 99.9 percent of the time. For more details see https://docs.aws.amazon.com/AWSEC2/latest/UserGuide/ebs-volume-types.html.

As per Microsoft SQL Server best practice, 1E recommends provisioning at least three separate AWS disk volumes, for SQL Data, Logs and TempDB. Each volume may be made up of multiple io1 disks, striped in a basic array using Windows Storage Spaces to create a single higher-performance volume with the combined IOPS of all the individual disks in the storage pool. For an example of this, see https://d1.awsstatic.com/whitepapers/maximizing-microsoft-sql-using-ec2-nvme-instance-store.pdf.

To gain the maximum storage throughput possible for a given instance type, the configured volumes should have a combined MBs/IOPS throughput equal to or above the total MBs/IOPS supported by that instance type.

Increasing AWS SQL Server Performance

The latest AWS R5b EC2 instances are specifically designed for larger SQL Server deployments that require higher EBS performance per instance. R5b instances deliver up to 60 Gbps bandwidth and 260K IOPS of EBS performance, the fastest block storage performance on EC2: https://aws.amazon.com/blogs/compute/sql-server-runs-better-on-aws/.

For larger scale Tachyon environments, achieving the best storage throughput provides the best performance for long running data consolidation operations.

In addition, the latest Amazon EC2 R5b Instances benefit from the highest block storage performance with a single storage volume using io2 Block Express: https://aws.amazon.com/ebs/provisioned-iops/.

AWS R5b instances and io2 Block Express EBS storage allow you to achieve the maximum storage throughput available to the VM for high-intensity SQL Server operations.

AWS EC2 prescriptive design patterns

Design pattern | Medium 1 | Medium 2 | Large 1 | Large 2 | Large 3 |

|---|---|---|---|---|---|

Max devices | 25,000 | 50,000 | 100,000 | 200,000 | 500,000 |

Tachyon server | |||||

AWS Instance | M5.xlarge | M5.2xlarge | M5.4xlarge | M5.8xlarge | M5.16xlarge |

CPU Cores | 4 | 8 | 16 | 32 | 64 |

RAM | 16 GB | 32 GB | 64 GB | 128 GB | 256 GB |

Switches | 1 | 1 | 2 | 4 | 10 |

NICs | 2 | 2 | 3 | 5 | 11 |

Remote SQL Server | |||||

AWS Instance | R5.xlarge | R5.2xlarge | R5.4xlarge | R5.12xlarge | R5.16xlarge |

CPU Cores | 4 | 8 | 16 | 24* | 64 |

RAM | 32 GB | 64 GB | 128 GB | 384 GB | 512 GB |

Max MB/s | 593.75 | 593.75 | 593.75 | 1,187.5 | 1,700 |

Max IOPS | 18,750 | 18,750 | 18,750 | 40,000 | 60,000 |

DB Disk Size | 500 GB | 1,000 GB | 2,000 GB | 4,000 GB | 8,000 GB |

DB Disk IOPS | 10,000 | 10,000 | 12,000 | 24,000 | 48,000 |

Logs Disk Size | 150 GB | 300 GB | 600 GB | 1,200 GB | 2,000 GB |

Log Disk IOPS | 5,000 | 5,000 | 6,000 | 8,000 | 16,000 |

TempDB Size | 150 GB | 300 GB | 600 GB | 1,200 GB | 2,000 GB |

TempDB IOPS | 6,000 | 6,000 | 8,000 | 10,000 | 20,000 |

Note

Customers with an intermediate seat count should choose the next higher size. For example, a 150,000-seat implementation should be treated as Large 2 rather than Large 1.

The Large 2 sizing (*) uses a larger instance size to get the necessary storage throughput, but assumes that hyper-threading is disabled on the instance to reduce the vCPU count and therefore SQL license costs.
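The rule for intermediate seat counts can be expressed as a simple lookup. This sketch uses the device limits from the design pattern tables. The function and constant names are hypothetical.

```python
# Maximum devices per design pattern, taken from the sizing tables.
PATTERNS = [
    (25_000, "Medium 1"),
    (50_000, "Medium 2"),
    (100_000, "Large 1"),
    (200_000, "Large 2"),
    (500_000, "Large 3"),
]


def choose_pattern(devices: int) -> str:
    """Return the smallest design pattern whose device limit covers the seat count."""
    if devices <= 0:
        raise ValueError("device count must be positive")
    for max_devices, name in PATTERNS:
        if devices <= max_devices:
            return name
    raise ValueError("device count exceeds the largest documented pattern")


print(choose_pattern(150_000))  # Large 2
```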

AWS network considerations

A server hosting a Tachyon Response Stack requires a dedicated network interface for each of its Switches, plus one for the connection to the remote SQL Server instance used for the Responses database. This keeps incoming traffic from clients separate from the outgoing traffic to the Responses database.

Enhanced networking (SR-IOV) must be enabled on both NICs; this should be the default. Detailed steps on how to verify this are given here: https://docs.aws.amazon.com/AWSEC2/latest/WindowsGuide/sriov-networking. Also increase the transmit (TX) and receive (RX) buffer sizes to their maximum under the network adaptor advanced properties.

Additional and complex configurations

Tachyon Switch and Background Channel servers (DMZ)

In some customer environments with network segmentation, it may be beneficial to separate the Switch infrastructure servers from other Tachyon component servers, so that clients connect to a server that is local to their network, whilst the platform and SQL Servers reside in a more central datacenter subnet.

The same model applies to a DMZ environment, where remote clients connect only to Switch and Background Channel components in an intentionally separated DMZ environment and subnet.

Tachyon Setup (DMZ installation) can be used to install only the Tachyon components required for client connectivity, that is, the Switch and Background Channel (BGC).

Servers with only Switch and BGC components have lower memory, CPU core and storage requirements than a full Tachyon server, but the following requirements should be noted:

As stated above, a new instance of the Switch is required for every 50,000 clients, with a dedicated NIC of at least 1 Gbps. This network IP address may be shared with the BGC.

To support more client connections, each additional 50,000 clients requires an additional instance of the Switch with its own dedicated NIC and IP address.

An additional internal-facing interface is required for outgoing response traffic from the Switches on the Switch/DMZ Server to the internal Response Stack. This should also have a minimum speed of 1 Gbps, or higher if hosting multiple Switch instances.
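The Switch and NIC requirements above reduce to a short calculation: one Switch per 50,000 clients, one dedicated NIC per Switch, and one further internal-facing NIC. A minimal sketch, with hypothetical names:

```python
import math
from typing import Tuple

CLIENTS_PER_SWITCH = 50_000  # each Switch instance handles up to 50,000 clients


def switches_and_nics(clients: int) -> Tuple[int, int]:
    """Return (switch_count, nic_count) for a server hosting Switches.

    One dedicated NIC per Switch, plus one internal-facing NIC for
    outgoing response traffic.
    """
    if clients <= 0:
        raise ValueError("client count must be positive")
    switches = math.ceil(clients / CLIENTS_PER_SWITCH)
    return switches, switches + 1


print(switches_and_nics(200_000))  # (4, 5)
```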

Platform | On-premises | Microsoft Azure | Amazon AWS |

|---|---|---|---|

Devices | Up to 50,000 | Up to 50,000 | Up to 50,000 |

Server Type | Physical or Virtual | Std_D4s_v4 | M5.xlarge |

CPU Cores | 4 | 4 | 4 |

RAM | 16 GB | 16 GB | 16 GB |

Switches | 1 | 1 | 1 |

NICs | 2 | 2 | 2 |

Devices | Up to 100,000 | Up to 100,000 | Up to 100,000 |

Server Type | Physical or Virtual | Std_D8s_v4 | M5.2xlarge |

CPU Cores | 8 | 8 | 8 |

RAM | 32 GB | 32 GB | 32 GB |

Switches | 2 | 2 | 2 |

NICs | 3 | 3 | 3 |

Devices | Up to 200,000 | Up to 200,000 | Up to 200,000 |

Server Type | Physical or Virtual | Std_D16s_v4 | M5.4xlarge |

CPU Cores | 16 | 16 | 16 |

RAM | 64 GB | 64 GB | 64 GB |

Switches | 4 | 4 | 4 |

NICs | 5 | 5 | 5 |

Separate Master and Response Stacks

The standard Tachyon installation assumes all components are installed on a single server, with databases hosted on a remote dedicated SQL Server.

However, splitting Tachyon components between multiple servers is also supported. This configuration creates a Tachyon Master Stack server (Coordinator Service, Consumer API, Experience and SLA Inventory components) and one or more Tachyon Response Stack servers (Switch, Background Channel and Core components).

Tachyon Setup has different configuration options to support these installation types: install a Master Stack first, and then the separate Response Stack servers.

A split-server configuration would only really be practical in large scale environments, but does have a couple of advantages over installing all components on a single server:

Spread the hardware requirements across multiple, smaller servers: if the required VM size (CPU, memory or number of NICs) is greater than the capabilities of the virtual host, then multiple smaller VMs may be more practical in some environments.

Provide some resilience and fault tolerance for the platform: multiple, redundant Response Stack servers allow for the failure of a single Response server VM, with all clients failing over to the remaining servers and their Switch instances.

Multiple Response Stacks can provide higher performance throughput than a single server, but at the cost of increased total vCPU and RAM for the platform, across multiple VMs.

Achieving resilience assumes that the number of Switches configured, and the total capacity of the Response servers that remain after a failure, match the total load of all required client connections. This may mean that at least three Response servers are required, so that the two remaining servers can handle all the required active connections if one VM fails.

In terms of resilience, it should be noted that multiple redundant Response Servers only provide resilience in the failure of one of the redundant Response server VMs – if the separate and single Master Stack VM (or SQL Server) fails, then the entire Tachyon solution would be unavailable.

Overall resilience and high availability are best provided by the standard high-availability features built into the chosen virtualization platform and at the storage system level (on-premises) or, in the cloud, by the provider's specific high-availability and disaster recovery options.

The rule that for every 50,000 clients, Tachyon requires a separate instance of the Switch component, which requires a dedicated Network Interface (NIC), still applies for Response Servers, as they will host switch instance(s) alongside the Tachyon Core component.

If deploying separate Response and Master Stack servers, it is still recommended to install all Tachyon databases on a separate, dedicated SQL Server, so as not to mix a SQL Server installation with IIS and other web server components, as per Microsoft best practice. Therefore, the VM sizing guidelines, disk throughput and storage requirements for the SQL Server VM are identical for a single-server or a split-server platform configuration. Please refer to the above sections for SQL Server sizing, using the total client count required for the environment.

Response Stack server design patterns

With Response Stack servers, there are various configuration sizes that could be used to support the required maximum number of active clients, depending on required resilience or redundancy.

The basic formula for sizing a separate Response server is to allocate at least 8 vCPU cores and 16 GB of memory for a maximum of 50,000 active clients reporting to that server.

Response Servers | ||||

|---|---|---|---|---|

Maximum Clients | 25,000 | 50,000 | 100,000 | 200,000 |

CPU Cores | 4 | 8 | 16 | 32 |

RAM | 12 GB | 24 GB | 48 GB | 96 GB |

Switches | 1 | 1 | 2 | 4 |

NICs | 2 | 2 | 3 | 5 |

Note

When planning for resiliency, the individual Response Stack servers should have enough capacity that at least one VM can fail while the remaining VMs still support the total number of required connections.
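One way to reason about this note is to size the deployment for the full load and then add spare servers for the failures you want to tolerate. The sketch below assumes identically sized Response Stack servers. The function name and the 100,000-client server size in the example are illustrative assumptions.

```python
import math


def response_servers_needed(total_clients: int, clients_per_server: int,
                            tolerated_failures: int = 1) -> int:
    """Servers required so the survivors still carry the full client load.

    Assumes all Response Stack servers are sized identically.
    """
    if total_clients <= 0 or clients_per_server <= 0 or tolerated_failures < 0:
        raise ValueError("invalid sizing inputs")
    servers_for_load = math.ceil(total_clients / clients_per_server)
    return servers_for_load + tolerated_failures


# 200,000 clients on servers sized for 100,000 each: two carry the load,
# and a third allows one VM to fail.
print(response_servers_needed(200_000, 100_000))  # 3
```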

Master Stack server design patterns

The basic formula for sizing a separate Master Stack server is to allocate at least 2 vCPU cores and 16 GB of memory for every 50,000 active clients in the environment.

The following table details the VM CPU, memory and NIC requirements for example Master Stack servers, supporting a maximum total number of active clients in the environment.

Master Stack Servers | ||||

|---|---|---|---|---|

Maximum Clients | 50,000 | 100,000 | 200,000 | 400,000 |

CPU Cores | 2 | 4 | 8 | 16 |

RAM | 16 GB | 32 GB | 64 GB | 128 GB |

NICs | 1 | 1 | 1 | 1 |

Note

Although the multiple (and potentially redundant) Response Stack servers can support more clients, the total number of active clients across all the Response Stack servers will always stay the same.
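The Master Stack formula can be sketched as below. Rounding intermediate client counts up to the next 50,000 is an assumption made here for illustration, in line with the advice elsewhere to choose the next higher size. The function name is hypothetical.

```python
import math


def master_stack_size(active_clients: int) -> dict:
    """Minimum Master Stack sizing: 2 vCPU cores and 16 GB RAM per 50,000 clients.

    Intermediate client counts are rounded up to the next 50,000 (an assumption).
    """
    if active_clients <= 0:
        raise ValueError("client count must be positive")
    units = math.ceil(active_clients / 50_000)
    return {"vcpus": 2 * units, "ram_gb": 16 * units}


print(master_stack_size(200_000))  # {'vcpus': 8, 'ram_gb': 64}
```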

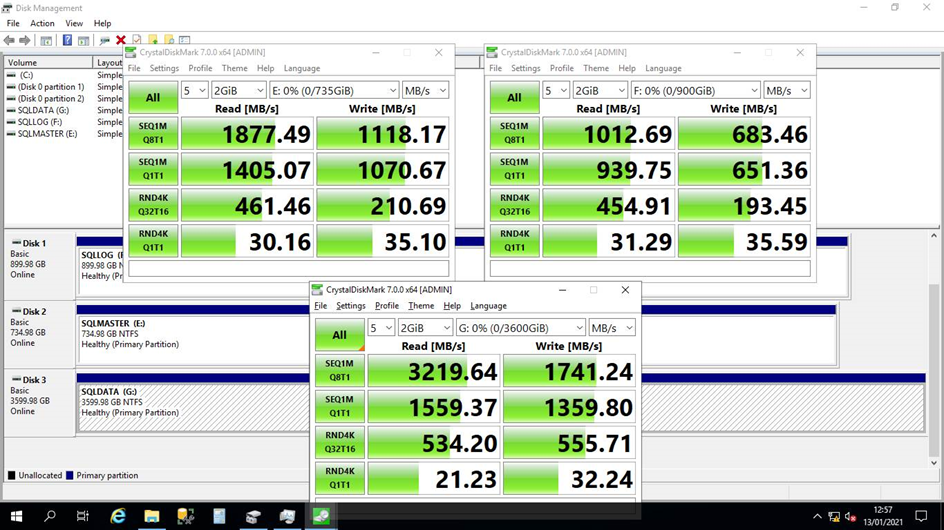

How we measure disk performance

There are a number of third-party tools for measuring disk and storage performance, but the one most commonly used and referenced by hardware providers is CrystalDiskMark (https://crystalmark.info/en/software/crystaldiskmark).

An example of the output from CrystalDiskMark is shown below, where the drives consist of an array of Samsung Pro 850, 6 Gb/s SATA SSDs.

Under the hood, CrystalDiskMark uses the Microsoft tool Diskspd (https://github.com/microsoft/diskspd/releases) which can be useful to run on its own to get a simple and reproducible way to measure different server and VM disk subsystem performance.

Use the following command line to test each of the relevant SQL volumes, by drive letter, in turn.

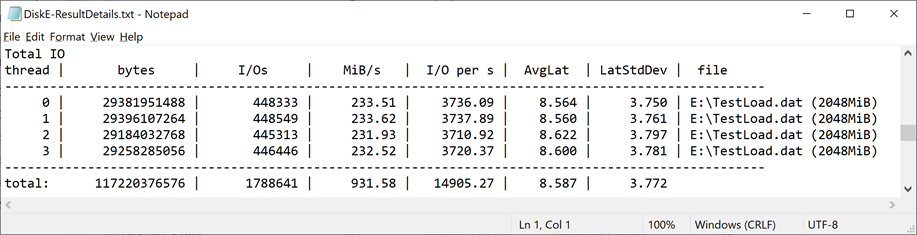

Diskspd -b64k -d120 -o32 -t4 -h -r -w25 -L -c2G G:\TestLoad.dat > GDisk_resultdetails.txt

Once complete, review the output in the created results file GDisk_resultdetails.txt for the Total IO thread section. In the following example, the total throughput in MiB/s is measured at 931.58.

Tachyon is more akin to a Data Warehouse type SQL application (large sequential writes and reads) than an OLTP type system (millions of small random IO requests). Therefore, overall throughput (MB/s) is more important than IOPS alone. Also, when comparing IOPS in the sizing tables above (16 KiB I/O) with Diskspd results at a 64K block size, multiply the Diskspd IOPS figure by four to equate the values.
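The block size comparison can be sketched as a conversion that holds throughput constant, so that fewer large I/Os equate to proportionally more small ones. The function names and the 10,000 IOPS input are illustrative assumptions, not measured values.

```python
def iops_at_block_size(iops: float, from_kib: int, to_kib: int) -> float:
    """Convert an IOPS figure between block sizes, holding throughput constant."""
    if from_kib <= 0 or to_kib <= 0:
        raise ValueError("block sizes must be positive")
    return iops * from_kib / to_kib


def throughput_mib_per_s(iops: float, block_kib: int) -> float:
    """Throughput in MiB/s implied by an IOPS figure at a given block size."""
    return iops * block_kib / 1024


# A Diskspd result measured with 64K blocks, expressed as equivalent 16 KiB IOPS.
print(iops_at_block_size(10_000, from_kib=64, to_kib=16))  # 40000.0
print(throughput_mib_per_s(10_000, 64))                    # 625.0
```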